Every year, millions of sensitive records are leaked from Amazon S3. Misconfigured S3 buckets are among the most common and widely targeted attack vectors; an entire hacker cottage industry has grown up around finding and exploiting them.

Why are Amazon S3 data leaks so common? Because all it takes is one or two clicks to upload sensitive data to the wrong bucket or to change a bucket’s permissions from private to public. If you’re a large company with millions of S3 objects across multiple accounts, chances are very high that you already have an unsecured S3 bucket. The most common issues are:

– A user uploads sensitive data to a public bucket without realizing that every object in a public bucket is publicly accessible.

– A user creates an S3 bucket, modifies the default configuration, and later uploads data without first validating the configuration.

Preventing these leaks takes only a few simple steps. Here are some you can take today to prevent data exposure:

1. Restrict public access to Amazon S3 buckets with bucket policies

Let’s say we’ve created an Amazon S3 bucket named iamabucket. After creating the bucket, add a bucket policy that denies any request to upload an object with public permissions or to make the bucket itself public. The policy below blocks the public-read, public-read-write, and authenticated-read canned ACLs, as well as explicit read grants to the AllUsers or AuthenticatedUsers groups.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyPublicReadACL",
      "Effect": "Deny",
      "Principal": { "AWS": "*" },
      "Action": ["s3:PutObject", "s3:PutObjectAcl"],
      "Resource": "arn:aws:s3:::iamabucket/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": ["public-read", "public-read-write", "authenticated-read"]
        }
      }
    },
    {
      "Sid": "DenyPublicReadGrant",
      "Effect": "Deny",
      "Principal": { "AWS": "*" },
      "Action": ["s3:PutObject", "s3:PutObjectAcl"],
      "Resource": "arn:aws:s3:::iamabucket/*",
      "Condition": {
        "StringLike": {
          "s3:x-amz-grant-read": [
            "*http://acs.amazonaws.com/groups/global/AllUsers*",
            "*http://acs.amazonaws.com/groups/global/AuthenticatedUsers*"
          ]
        }
      }
    },
    {
      "Sid": "DenyPublicListACL",
      "Effect": "Deny",
      "Principal": { "AWS": "*" },
      "Action": "s3:PutBucketAcl",
      "Resource": "arn:aws:s3:::iamabucket",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": ["public-read", "public-read-write", "authenticated-read"]
        }
      }
    },
    {
      "Sid": "DenyPublicListGrant",
      "Effect": "Deny",
      "Principal": { "AWS": "*" },
      "Action": "s3:PutBucketAcl",
      "Resource": "arn:aws:s3:::iamabucket",
      "Condition": {
        "StringLike": {
          "s3:x-amz-grant-read": [
            "*http://acs.amazonaws.com/groups/global/AllUsers*",
            "*http://acs.amazonaws.com/groups/global/AuthenticatedUsers*"
          ]
        }
      }
    }
  ]
}
```

This prevents users from accidentally uploading a public object to this bucket or making the bucket public. To apply the same policy across multiple buckets, you can use AWS CloudFormation.
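If you manage many buckets, generating the policy from code keeps the four statements identical everywhere. The following is a minimal Python sketch, assuming you want exactly the statements shown above; `build_deny_public_acl_policy` is a hypothetical helper name, and the sketch only builds the JSON document. Applying it (for example with boto3’s `put_bucket_policy(Bucket=..., Policy=...)` or a CloudFormation template) is left to your deployment tooling.

```python
import json

# Canned ACLs that expose data publicly or to any authenticated AWS user.
PUBLIC_CANNED_ACLS = ["public-read", "public-read-write", "authenticated-read"]

# AllUsers / AuthenticatedUsers grantee URIs, wrapped in wildcards because the
# x-amz-grant-read header can list several grantees at once.
PUBLIC_GRANT_PATTERNS = [
    "*http://acs.amazonaws.com/groups/global/AllUsers*",
    "*http://acs.amazonaws.com/groups/global/AuthenticatedUsers*",
]


def _deny(sid, actions, resource, operator, key, values):
    """Build one Deny statement that applies to every principal."""
    return {
        "Sid": sid,
        "Effect": "Deny",
        "Principal": {"AWS": "*"},
        "Action": actions,
        "Resource": resource,
        "Condition": {operator: {key: values}},
    }


def build_deny_public_acl_policy(bucket):
    """Hypothetical helper: return the deny-public-ACL policy for `bucket`."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    object_arn = f"{bucket_arn}/*"
    object_actions = ["s3:PutObject", "s3:PutObjectAcl"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            _deny("DenyPublicReadACL", object_actions, object_arn,
                  "StringEquals", "s3:x-amz-acl", PUBLIC_CANNED_ACLS),
            _deny("DenyPublicReadGrant", object_actions, object_arn,
                  "StringLike", "s3:x-amz-grant-read", PUBLIC_GRANT_PATTERNS),
            _deny("DenyPublicListACL", "s3:PutBucketAcl", bucket_arn,
                  "StringEquals", "s3:x-amz-acl", PUBLIC_CANNED_ACLS),
            _deny("DenyPublicListGrant", "s3:PutBucketAcl", bucket_arn,
                  "StringLike", "s3:x-amz-grant-read", PUBLIC_GRANT_PATTERNS),
        ],
    }


if __name__ == "__main__":
    # Print one policy per bucket; feed the JSON to your deployment tool.
    for name in ["iamabucket"]:
        print(json.dumps(build_deny_public_acl_policy(name), indent=2))
```

Because every statement is an explicit Deny, it takes precedence over any Allow granted in IAM or bucket policies.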

2. Check S3 policies in AWS Trusted Advisor or the S3 console

If you have a limited number of buckets and want to perform a manual check, you can now use AWS Trusted Advisor to run the S3 Bucket Permissions check for free. This check was previously available only to Business and Enterprise Support customers.

1. Go to Trusted Advisor (under Management Tools)

2. Select “Security”

3. Once you click Security, you’ll see a list of checks with a green, yellow, or red status indicator next to each one. AWS Trusted Advisor checks buckets for:

- Does the bucket ACL allow List access for Authenticated Users?

- Does the bucket policy allow any access?

- Does the bucket ACL allow Upload/Delete access for Authenticated Users?

4. You can also go to the S3 console and review the list of buckets to make sure the proper configurations are in place. Fair warning: while AWS has greatly improved the console’s warning indicators, scanning the console alone is not enough to ensure that you don’t have unsecured resources.
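Trusted Advisor’s first and third questions are about ACL grants to the AllUsers and AuthenticatedUsers groups; the second is about bucket policies open to everyone. For a scripted second opinion, here is a minimal Python sketch of the same checks, assuming you have already fetched each bucket’s ACL and parsed policy. The function names are hypothetical, and the policy check deliberately ignores `Condition` blocks, so it can flag statements that are in fact restricted (for example by source IP).

```python
# Grantee URIs of the two S3 predefined groups that make an ACL grant public
# (AllUsers) or open to any AWS account (AuthenticatedUsers).
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTHENTICATED_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"


def risky_acl_grants(acl):
    """Hypothetical helper: list (group, permission) pairs that grant access to
    AllUsers or AuthenticatedUsers. `acl` is shaped like get_bucket_acl() output."""
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        uri = grantee.get("URI")
        if grantee.get("Type") == "Group" and uri in (ALL_USERS, AUTHENTICATED_USERS):
            # Keep only the group name, e.g. "AllUsers".
            findings.append((uri.rsplit("/", 1)[-1], grant.get("Permission")))
    return findings


def _is_everyone(principal):
    """True if a policy Principal is "*" or lists "*" under the AWS key."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS", [])
        aws = [aws] if isinstance(aws, str) else aws
        return "*" in aws
    return False


def open_policy_statements(policy):
    """Hypothetical helper: return the Sid (or list index, if there is no Sid)
    of every Allow statement granted to everyone. Conditions are ignored."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a policy may hold a single statement object
        statements = [statements]
    return [
        stmt.get("Sid", i)
        for i, stmt in enumerate(statements)
        if stmt.get("Effect") == "Allow" and _is_everyone(stmt.get("Principal"))
    ]
```

With boto3, `s3.get_bucket_acl(Bucket=name)` returns a dict in the shape `risky_acl_grants` expects, and `json.loads(s3.get_bucket_policy(Bucket=name)["Policy"])` gives the parsed policy (that call raises an error if the bucket has no policy). On a bucket ACL, `READ` corresponds to List access and `WRITE` to Upload/Delete access.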

3. Use AWS Config to alert you when someone creates a publicly accessible S3 bucket

AWS Config is a service that records configuration changes to your AWS resources and evaluates them against rules that you define. You can use it to monitor Amazon S3 bucket ACLs and policies for any bucket that allows public read or write access, and then send an alert through Amazon SNS.
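If you prefer to create the recommended managed rules from code rather than the console, the rule definitions are small. This is a minimal Python sketch, assuming AWS Config is already recording S3 buckets and delivering notifications to an SNS topic; `build_config_rules` is a hypothetical helper, and the dicts follow the shape that boto3’s `put_config_rule(ConfigRule=...)` accepts.

```python
# AWS managed Config rules for S3 public access. Keys are the rule names used
# in this post; values are the managed-rule SourceIdentifiers AWS publishes.
MANAGED_S3_RULES = {
    "s3-bucket-public-read-prohibited": "S3_BUCKET_PUBLIC_READ_PROHIBITED",
    "s3-bucket-public-write-prohibited": "S3_BUCKET_PUBLIC_WRITE_PROHIBITED",
}


def build_config_rules():
    """Hypothetical helper: return one ConfigRule dict per managed S3 rule."""
    return [
        {
            "ConfigRuleName": name,
            # Owner "AWS" marks this as an AWS managed rule, not a custom Lambda rule.
            "Source": {"Owner": "AWS", "SourceIdentifier": identifier},
            # Evaluate only S3 buckets.
            "Scope": {"ComplianceResourceTypes": ["AWS::S3::Bucket"]},
        }
        for name, identifier in MANAGED_S3_RULES.items()
    ]


if __name__ == "__main__":
    # To apply, pass each dict to boto3.client("config").put_config_rule(ConfigRule=rule).
    for rule in build_config_rules():
        print(rule["ConfigRuleName"], rule["Source"]["SourceIdentifier"])
```

Keeping the rule names in one table makes it easy to add further managed S3 rules later without touching the builder.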

AWS has comprehensive documentation on how to enable this, including how to:

- Enable AWS Config to monitor your Amazon S3 buckets

- Configure S3 bucket monitoring rules (we recommend s3-bucket-public-read-prohibited and s3-bucket-public-write-prohibited by default)

- Choose an Amazon S3 bucket to store configuration history and snapshots

- Stream configuration changes and notifications to an SNS topic

- Create a subscription for your SNS topic (email or SMS)

- Verify that it works. If you create an unsecured bucket, AWS Config should look like this:

And you will get an email that looks like this:

4. Enable default encryption for S3 buckets

Default encryption for Amazon S3 buckets ensures that objects are encrypted when they are stored in the bucket, using server-side encryption with either an Amazon S3-managed key (SSE-S3) or an AWS KMS-managed key (SSE-KMS). We usually use KMS. With default encryption enabled, you don’t have to specify encryption settings in every object upload request. Note that it applies only to objects uploaded after you enable it; existing objects are not re-encrypted.

Enabling default encryption takes just a few clicks in the S3 console:

- Choose the name of the bucket you want to configure

- Choose Properties

- Choose Default Encryption

- Choose either AES-256 or KMS.

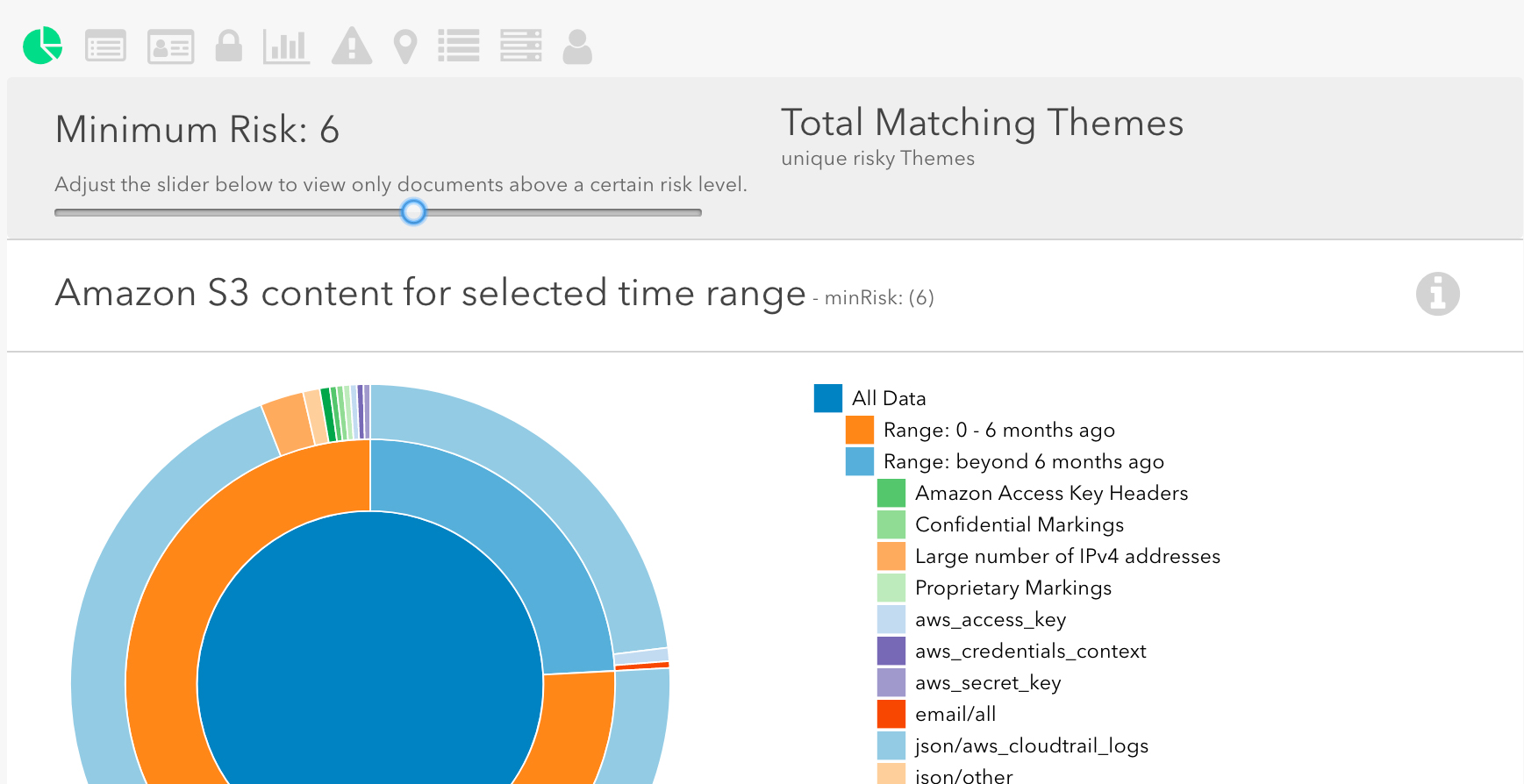
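The same setting can be expressed as the configuration object the S3 API expects. Below is a minimal Python sketch, assuming you apply it with boto3’s `put_bucket_encryption(Bucket=..., ServerSideEncryptionConfiguration=...)`; `build_default_encryption` is a hypothetical helper, and the KMS key ARN used in the example is a placeholder.

```python
def build_default_encryption(kms_key_id=None):
    """Hypothetical helper: build the ServerSideEncryptionConfiguration that
    boto3's put_bucket_encryption() accepts.

    No key: SSE-S3 ("AES256", the console's AES-256 option).
    A KMS key ID or ARN: SSE-KMS ("aws:kms", the console's KMS option).
    """
    if kms_key_id is None:
        default = {"SSEAlgorithm": "AES256"}
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


if __name__ == "__main__":
    # Example only: the key ARN below is a placeholder, not a real key.
    print(build_default_encryption())
    print(build_default_encryption("arn:aws:kms:us-east-1:111122223333:key/EXAMPLE"))
```

Passing a specific KMS key, as we usually do, lets you control who can decrypt the data through the key policy as well as the bucket policy.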

5. Enable Amazon Macie to monitor for PCI, PII, and PHI data

Amazon Macie is a relatively new service that scans S3 buckets and uses machine learning to classify potentially sensitive data. It can also use user behavior analytics to detect suspicious activity. You can then configure a CloudWatch Events rule to alert you about exposed data. Macie assigns a “risk level” to each potential issue it detects, and you can choose to receive alerts only above a certain risk level.
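The risk-threshold idea can be sketched in a few lines of Python. This is not Macie’s API: the alert dicts and their `risk` field are hypothetical stand-ins for whatever your CloudWatch event handler receives, higher numbers are assumed to mean higher risk, and `alerts_to_send` is a hypothetical helper.

```python
from typing import Iterable, List, Mapping


def alerts_to_send(alerts: Iterable[Mapping], min_risk: int) -> List[Mapping]:
    """Hypothetical helper: keep alerts whose (assumed) 'risk' score is at or
    above min_risk, highest risk first. Alerts without a score are dropped."""
    selected = [a for a in alerts if a.get("risk", 0) >= min_risk]
    return sorted(selected, key=lambda a: a["risk"], reverse=True)
```

Filtering before notification keeps low-risk noise out of your inbox, so the alerts that do arrive are more likely to be acted on.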

To enable Amazon Macie, follow the Amazon Macie setup instructions. Note that setting up Amazon Macie is fairly complex, and Macie itself incurs a charge depending on the number of objects and buckets.

Macie is well worth the investment in time and resources, especially if you have cloud compliance requirements or store sensitive information in S3.

6. Use Logicworks Data Loss Prevention

For customers who want full protection against Amazon S3 data leaks, Logicworks has combined all of the strategies described in this post into our Data Loss Prevention service for Amazon S3. The service lets you:

- Integrate Amazon Macie, other AWS cloud services, and custom scanners into a single dashboard

- Identify the most critical S3 buckets and configure Amazon Macie only for those buckets, saving you significant Macie costs

- Automatically quarantine S3 objects with a high risk level

- Make simple business decisions (such as “I want to be notified if any S3 objects in public buckets are unencrypted”), rather than setting up all the various services and permissions

- Easily set and change notification settings

If you want to learn more or get a demo of Logicworks Data Loss Prevention, go here.

Amazon S3 is secure by default, but user error makes S3 data leaks common. Every customer we’ve introduced to Logicworks Data Loss Prevention has uncovered unexpected, unsecured buckets. By taking a proactive approach to AWS cloud security, you can help ensure that your customers’ sensitive data is never exposed.